Utility Debugger

Before you start

This manual page describes how to work with the Utility Debugger. It assumes you have set up your game to provide data snapshots to the tool. There are two ways to do this:

- With files - the game serializes snapshots with debug data to the disk
- Live debugging - the game communicates with the tool via network and provides the data directly

For the purpose of the debugger description, we assume the first case, because the source of the debug data does not affect the workflow with the GUI tool.

The data acquisition step applies to all debugging tools. You need to enable debugging of the respective AIEntity that uses UtilityReasoner.

To see how to set up your game so that it produces snapshots, refer to Debugging Setup: Tutorial.

Load the data

Once you have the debug file, load it using the dashboard (Overview). These files typically have the extension *.gdi (grail debug info).

If the file contains debug information for Utility AI, then you can view it by switching to the Utility Debugger tab.

Note that the file may contain more debug information, e.g. a full snapshot with multiple entities and their reasoners.

The tool lets you view only what you want to focus on at the moment.

Debugging Utility AI

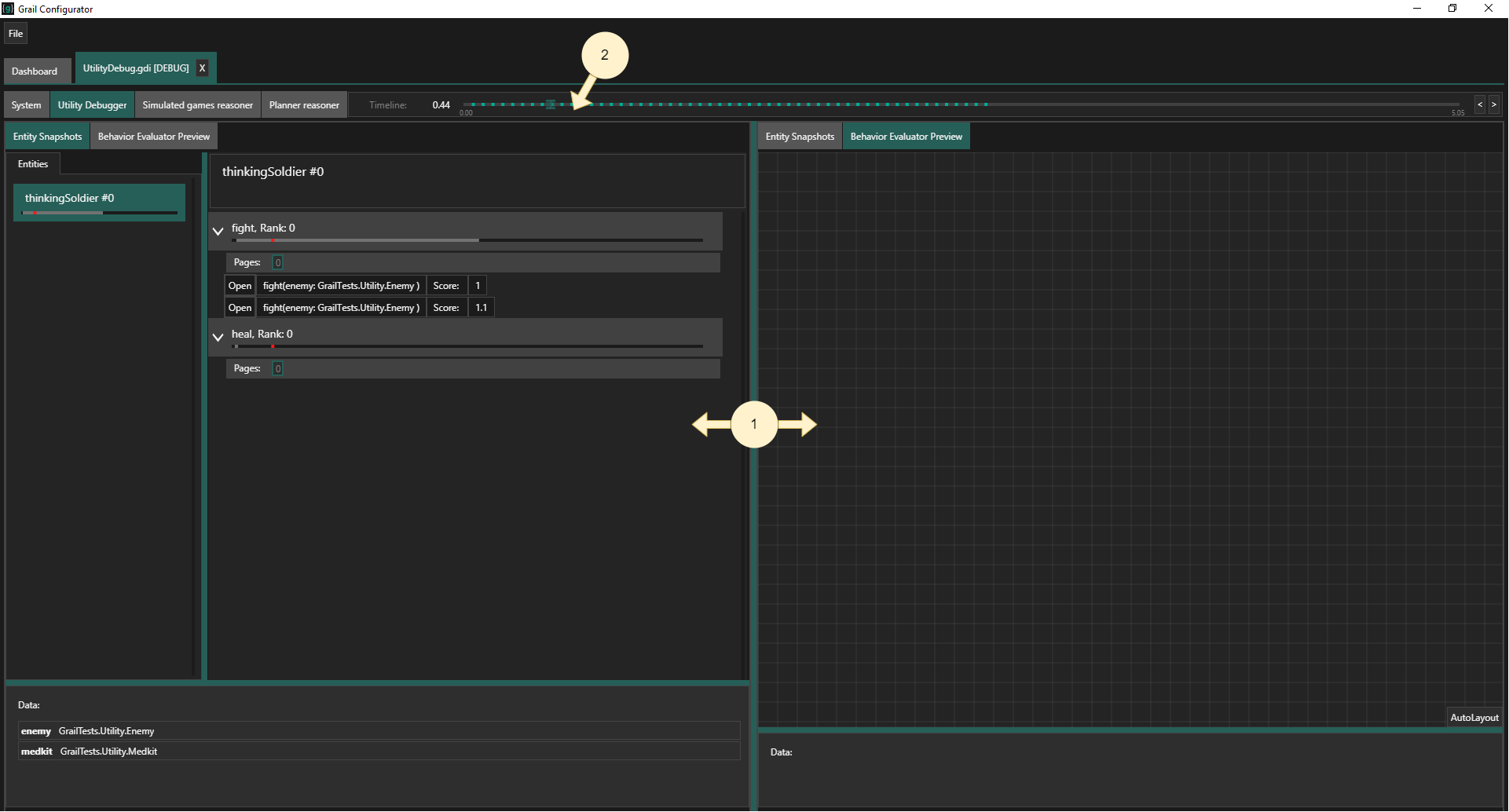

Figure 1 shows the Utility Debugger tab:

(1) - Main view columns

Both columns of this view can be independently switched between the Entity Snapshots and Behavior Evaluator Preview views.

(2) - Timeline - this is the timeline of events that happened during the game while debugging was on.

By manipulating the timeline you can visualize the changes to AI state over time.

The fragments marked by dots in a lighter color denote timestamps for which data is available.

Click them or use the slider to set a particular time.

In Figure 1 shown above, all data was gathered when the game time was equal to 0.44.

Entity Snapshots

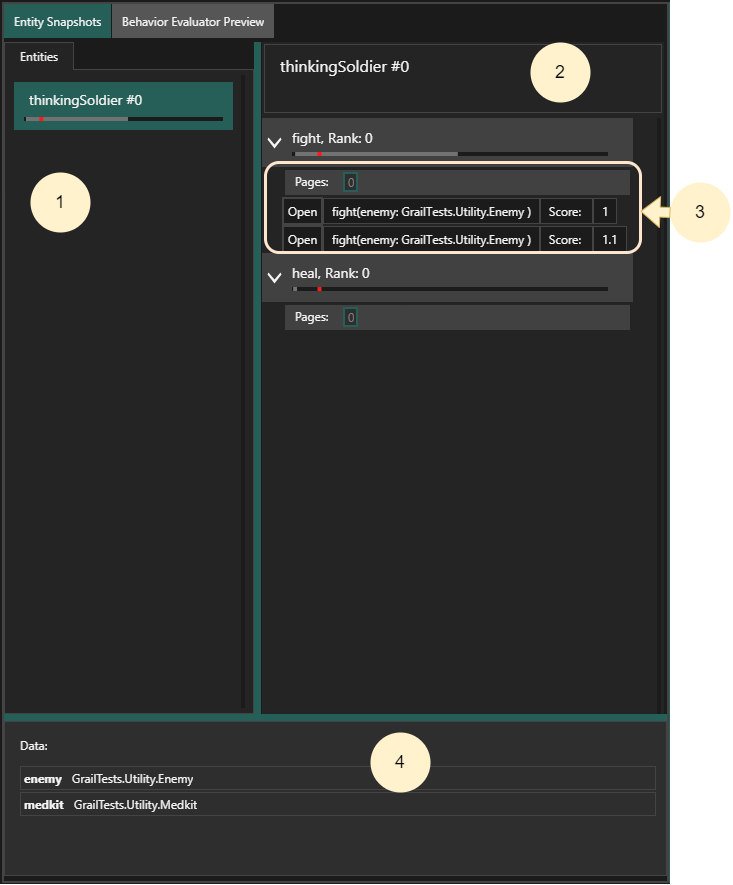

(1) - Entity list - this list shows all entities that use Utility as their reasoner and are present in the currently open debug file. This is a common tab for all debug views. Under each entity, you can see a black bar with certain fragments highlighted. The bar reflects the timeline. The highlighted fragments show the time intervals when the entity was actively selecting behaviors. The red dot marks the current timestamp.

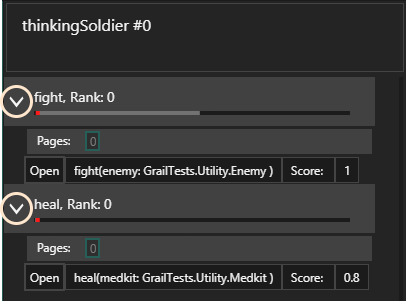

(2) - Action space - this view lists blueprints of behaviors that are used in the Utility reasoner. Clicking the down arrow shows all instances of behaviors based on the particular blueprint.

(3) - Behavior instances - distinct instantiations of behaviors of the same type.

Each instance may have different parameters.

Naturally, all of the instances based on the same blueprint share the same evaluation mechanism.

Clicking the Open button on a behavior instance switches the column to the Behavior Evaluator Preview for that behavior.

Note that, apart from names, you can see a text representation of the behaviors' parameters. If you want them to appear here, make sure they are serialized (Debugging Setup: Tutorial). In this example, the parameters were written to a blackboard returned by the context-producing function for the behavior blueprint.
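The relation between a blueprint and its instances can be illustrated with a minimal sketch. It is not the tool's or the library's actual API: `BehaviorBlueprint`, `BehaviorInstance`, `produce_contexts` and the plain-dictionary blackboard are hypothetical names chosen for illustration only. It shows one blueprint producing several instances that differ in parameters but share a single evaluation function.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stand-in for a context blackboard: a plain dictionary.
Blackboard = Dict[str, object]


@dataclass
class BehaviorBlueprint:
    name: str
    # Assumed context-producing function: one blackboard per instance.
    produce_contexts: Callable[[], List[Blackboard]]
    # Evaluation mechanism shared by every instance of this blueprint.
    evaluate: Callable[[Blackboard], float]

    def instantiate(self) -> List["BehaviorInstance"]:
        return [BehaviorInstance(self, ctx) for ctx in self.produce_contexts()]


@dataclass
class BehaviorInstance:
    blueprint: BehaviorBlueprint
    context: Blackboard

    def score(self) -> float:
        # Same evaluator for all instances, different context data.
        return self.blueprint.evaluate(self.context)

    def describe(self) -> str:
        # Text representation of the parameters, similar in spirit to
        # what a debugger could display next to the behavior name.
        params = ", ".join(f"{k}={v}" for k, v in sorted(self.context.items()))
        return f"{self.blueprint.name}({params})"


attack = BehaviorBlueprint(
    name="Attack",
    produce_contexts=lambda: [
        {"target": "orc", "distance": 2.0},
        {"target": "troll", "distance": 8.0},
    ],
    # Illustrative scoring rule: closer targets score higher.
    evaluate=lambda ctx: max(0.0, 1.0 - ctx["distance"] / 10.0),
)
instances = attack.instantiate()
```

Here both instances come from the same `Attack` blueprint, so any difference in their scores can only come from the parameters stored in their contexts.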

(4) - Selected entity’s blackboard data - in this panel you can see all the data stored on the selected entity’s private blackboard.

Behavior Evaluator Preview

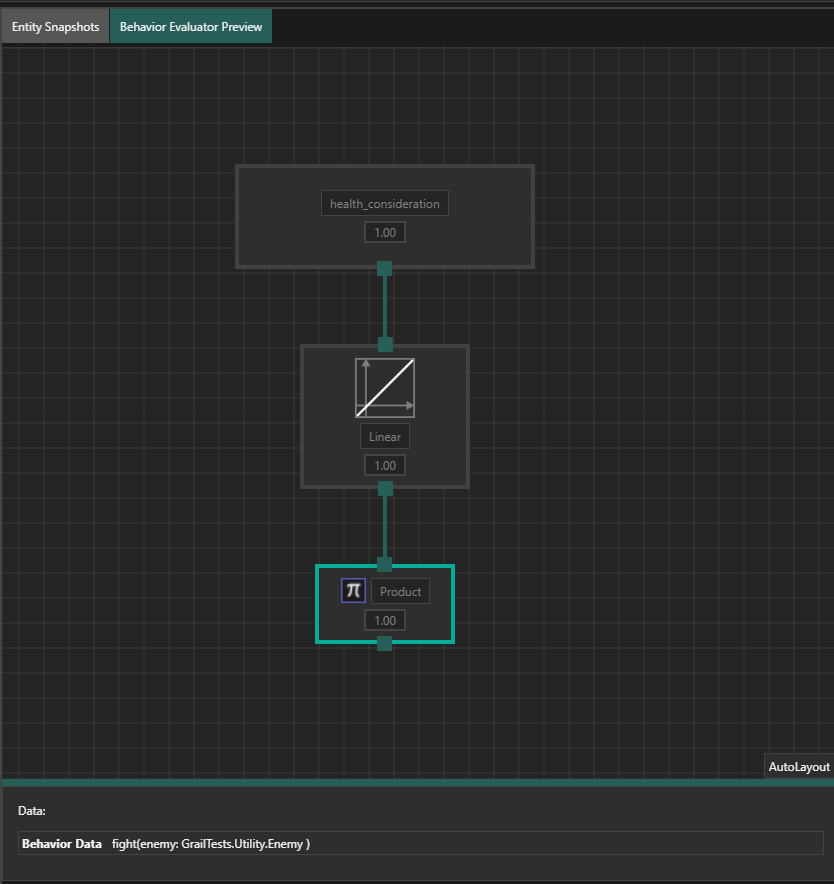

In this panel you can debug how the selected action was evaluated at a given timestamp. You can see the considerations, curves and aggregators it uses and the actual values passed as input and output to the respective elements.

The detailed panel shows the elements that make up the utility evaluation flow of a given behavior. The whole process starts with computing the values of the considerations. These are inputs about the world. You can then debug how these values "flow" until the final utility score is computed.

In the panel below the preview, the context data (taken from the context blackboard) of the selected behavior instance is displayed.
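The evaluation flow described in this section (considerations, then curves, then an aggregator) can be sketched in a few lines. This is a generic illustration of the idea, not the library's implementation: the function names, the linear curve, the product aggregator and the `world` dictionary are all assumptions made for the example. The returned trace plays the role of the input and output values shown in the preview.

```python
from typing import Callable, Dict, List, Tuple

Curve = Callable[[float], float]


def linear_curve(lo: float, hi: float) -> Curve:
    """Map [lo, hi] linearly onto [0, 1], clamping values outside the bounds."""
    def curve(x: float) -> float:
        t = (x - lo) / (hi - lo)
        return min(1.0, max(0.0, t))
    return curve


def inverse(curve: Curve) -> Curve:
    """Flip a curve so that low inputs give high outputs."""
    return lambda x: 1.0 - curve(x)


def product(values: List[float]) -> float:
    """Hypothetical aggregator: multiply all curve outputs together."""
    result = 1.0
    for v in values:
        result *= v
    return result


def evaluate(world: Dict[str, float],
             steps: List[Tuple[str, Curve]],
             aggregate: Callable[[List[float]], float]):
    """Return the final score and a trace of (consideration, input, output)."""
    trace = []
    outputs = []
    for name, curve in steps:
        raw = world[name]      # consideration: an input about the world
        out = curve(raw)       # curve: transforms the raw value
        trace.append((name, raw, out))
        outputs.append(out)
    return aggregate(outputs), trace


# Illustrative world state and evaluation setup (assumed values).
world = {"health": 25.0, "enemy_distance": 4.0}
steps = [
    ("health", inverse(linear_curve(0.0, 100.0))),
    ("enemy_distance", inverse(linear_curve(0.0, 20.0))),
]
score, trace = evaluate(world, steps, product)
```

Reading the trace entry by entry mirrors how you would follow the values through the preview: if the final score looks wrong, the first entry whose output is surprising points at the consideration or curve to inspect.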

Example use cases

- Investigate which behaviors get chosen at particular times
- Locate undesired situations in which you expected a different behavior
- Check values of considerations
  - is their logic implemented correctly?
  - maybe the existing considerations are too simple and don’t take something important into account?
- Check what happens with consideration values
  - a curve might be of wrong type
  - a curve might be parameterized incorrectly (see its parameters and bounds in code)
- Look at behavior evaluations
  - if you expected a different outcome, then some of them will probably score too high or too low
  - maybe you should change the evaluator type
  - maybe there are not enough distinct instances of a particular behavior
  - maybe the best option is to introduce more considerations
  - maybe one behavior is always assigned the highest score
  - maybe particular behaviors are changed too often? increase persistence
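
The last use case, behaviors that change too often, can be illustrated with a small sketch. It assumes persistence is modeled as an additive bonus to the score of the currently running behavior. The library may implement persistence differently, and `select_behavior`, `run` and the sample scores are hypothetical.

```python
from typing import Dict, List, Optional


def select_behavior(scores: Dict[str, float],
                    current: Optional[str],
                    persistence: float = 0.0) -> str:
    """Pick the best behavior, adding `persistence` to the current one's score."""
    def effective(name: str) -> float:
        bonus = persistence if name == current else 0.0
        return scores[name] + bonus
    return max(scores, key=effective)


# Assumed scores at three consecutive timestamps; the two behaviors
# score almost the same, so the winner flips without persistence.
frames = [
    {"Attack": 0.52, "Flee": 0.50},
    {"Attack": 0.49, "Flee": 0.51},
    {"Attack": 0.53, "Flee": 0.50},
]


def run(persistence: float) -> List[str]:
    """Replay the frames and return the behavior chosen at each timestamp."""
    current = None
    chosen = []
    for scores in frames:
        current = select_behavior(scores, current, persistence)
        chosen.append(current)
    return chosen
```

With `run(0.0)` the selection alternates between the two behaviors, while `run(0.05)` keeps the first choice throughout. A similar alternating pattern on the timeline is a hint that the persistence setting is worth reviewing.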