Simulated Games: offline learning

The motivation and purpose

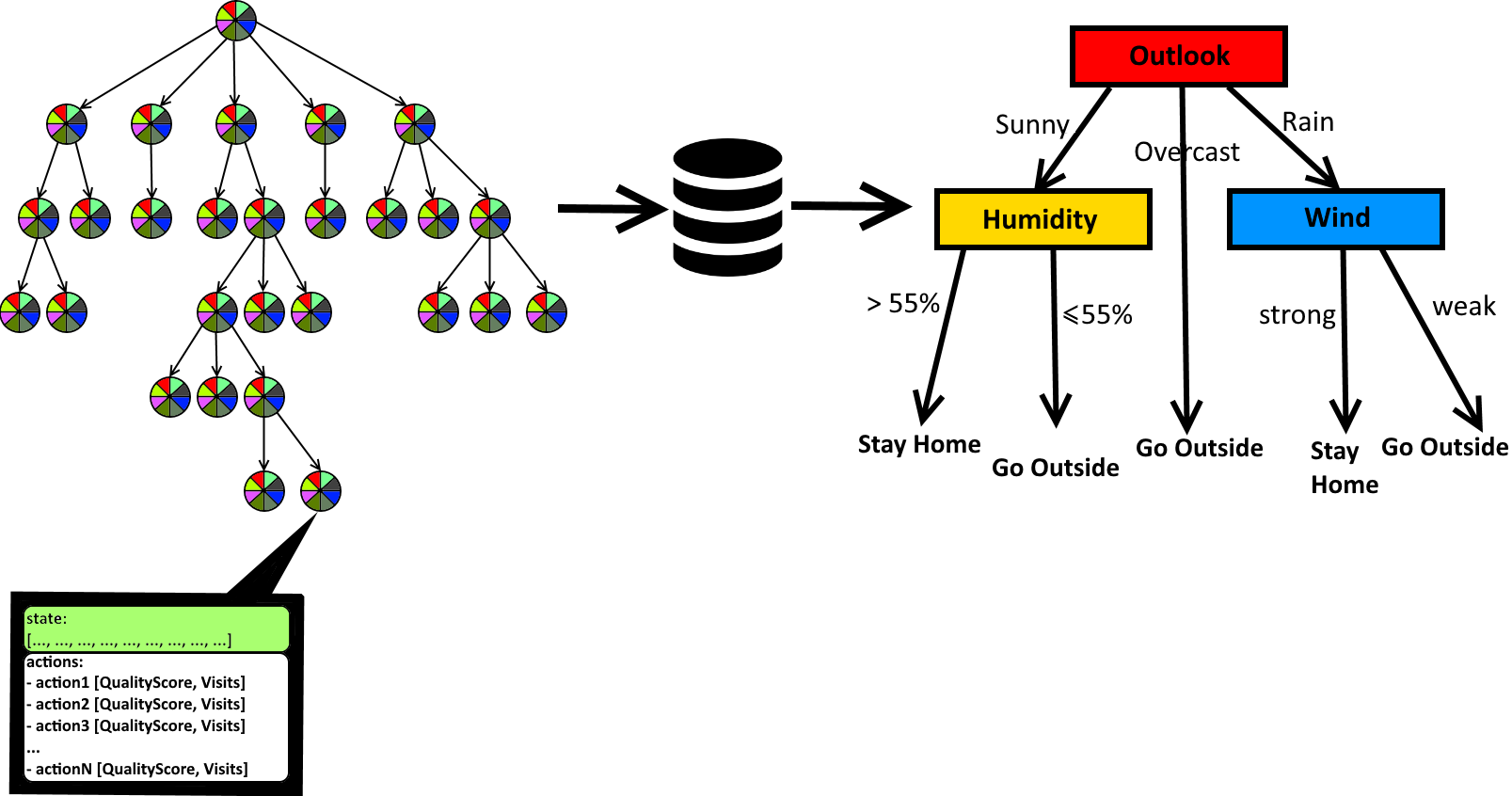

As stated in Performance Considerations, the offline learning approach is suggested for situations where simulating the game takes too much time. The MCTS algorithm is relatively computationally expensive, and sometimes it requires more simulations than we can afford while maintaining a smooth framerate and interactive gameplay. The idea is to relax the time constraints and run the MCTS algorithm in the so-called offline mode to gather statistics about the actions, and then transform the obtained knowledge into a representation that is fast to use in a shipped game.

- offline mode - simulations are executed during the development of the game. No human players should be involved in the game in this mode. Bots may take longer to think about which action to choose, so games may take a very long time to complete.

- online mode - the mode in which algorithms run in the final (shipped) game. Severe performance constraints apply.

Even if the learning process takes hours or even days, players will not notice, because the game ships with a pretrained model. The fast model of choice is the decision tree. This section explains how to set up the MCTS algorithm with the Simulated Games module to train and construct a decision tree automatically.

What decision tree is

A decision tree is a tree-like model commonly used in decision support. It is one of the simplest machine learning models and is especially useful for tabular data. The tree is a graphical representation of IF(…) THEN(…) sequences (internal nodes) that terminate in decisions (leaf nodes).

In Grail, decision trees are very specific:

- They inherit from SimulatedGameHeuristic, so they can be used not only as a stand-alone structure, but also inside Simulated Games as the heuristic action selector for one or more units.

- The decisions in the tree are specializations of the ISimulatedGameAction class.

- Internal nodes perform tests only on parameters of type float.

- The parameters can be either NOMINAL or NUMERIC:

  - NOMINAL parameters are categorical. For each unique value of the parameter there will be a separate node in the decision tree. Think of them as tests "IF(parameter == value)". Because the parameters are floating point numbers, there is a tolerance for the equality test (by default = 0.02f).

  - NUMERIC parameters are continuous. They assume that the parameter’s domain is somewhat continuous and that there exists a best split value. Nodes that correspond to numeric parameters will only have two children: "IF(parameter <= splitValue)" and "IF(parameter > splitValue)".
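The two kinds of node tests described above can be sketched as follows. This is only an illustration under stated assumptions, not Grail's implementation: the helper names NominalMatches and NumericGoesLeft are hypothetical, and whether the library treats the tolerance bound as inclusive is a guess. Only the default tolerance of 0.02 is taken from the text.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helpers illustrating the node tests; they are not part of the Grail API.

// NOMINAL test: "IF(parameter == value)", with a tolerance because the values are floats.
// 0.02f is the default tolerance mentioned in the text.
inline bool NominalMatches(float parameter, float value, float tolerance = 0.02f)
{
    assert(tolerance >= 0.0f); // a negative tolerance would never match
    return std::fabs(parameter - value) <= tolerance;
}

// NUMERIC test: true selects the "parameter <= splitValue" child,
// false selects the "parameter > splitValue" child.
inline bool NumericGoesLeft(float parameter, float splitValue)
{
    return parameter <= splitValue;
}
```

A nominal node would call NominalMatches once per child value until one matches, while a numeric node needs a single NumericGoesLeft call to pick one of its two children.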

Preparation

The offline learning approach is an experience-driven training session that results in the construction of a decision tree. The MCTS algorithm performs actions and gathers statistical evidence about the quality of those actions under certain circumstances, i.e., in the states they were performed in. To convert the internal statistics gathered by the MCTS/SimulatedGame approach into a decision tree, you are asked to provide a set of NOMINAL or NUMERIC parameters that become the basis for decisions. We will call them considerations.

Considerations, similarly to those in a Utility System, are selected parameters that can be measured in a given state of the game. They should capture the most important factors that affect players' decisions.

| Shooter game example: assume that there are actions such as [engage, flee, find cover, find ammo, find medkit]. Considerations can be [myHP, closestEnemyHP, myAmmo, myWeaponStrength, enemyWeaponStrength, angleMeEnemy]. |

Although considerations for offline learning are always float values, they are interpreted differently depending on whether you declare them as NOMINAL or NUMERIC. NOMINAL considerations mean that each unique value is treated separately (as in a switch statement). NUMERIC considerations mean that there exists some theoretical threshold value that affects the decision, for example: IF(myHP > 50) THEN engage.

The quality of the resulting tree mostly depends on two factors:

1. How accurately the considerations are chosen. Do they generalize the state well?

2. How well the possible situations of the game are covered in simulations. Are most possible situations simulated?

Because of 2., it is often a good idea to prepare training scenarios. Each scenario should show the SimulatedGame as many different situations as possible. Data gathered from different training scenarios should be combined with equal weight. We will explain how to do this in section "Gathering data".

Vectorizer

After you set up the simulated game, the only creative thing left to do is to implement one simple interface: IVectorizer.

C++

struct IVectorizer

{

virtual bool IsLearnableSituation(const ISimulatedGameUnit& unit) const = 0;

virtual std::vector<float> Vectorize() const = 0;

virtual ~IVectorizer() {}

};

C#

public interface IVectorizer

{

bool IsLearnableSituation(in ISimulatedGameUnit unit);

IEnumerable<float> Vectorize();

}

The first method, IsLearnableSituation(), informs the MCTS algorithm when to learn. You have the option to construct and use the decision tree only for certain aspects (e.g. resource allocation, build orders) and leave the remaining situations in the game to the MCTS.

| The same IVectorizer object is used to construct a decision tree and to use it later. If you limit learning to certain situations, then the decision tree will be capable of providing actions only in those situations. |

The second method, Vectorize(), must return the current values of the considerations. It will also be used later by the decision tree to determine the context in which an action is chosen.

As already mentioned, the considerations are some measurable factors based on which the action is chosen.

| Make sure you have access to objects that are required to properly vectorize the state. It is suggested to either have the vectorizer object store references to game entities or make your main game-state object implement this interface. |
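As an illustration of the advice above, here is a minimal sketch of a vectorizer for the shooter example. It is written under several assumptions: the two stand-in interfaces only mirror the declarations shown on this page so that the sketch compiles on its own (a real project would use the Grail headers instead), and ShooterState, ShooterVectorizer and the inCombat flag are hypothetical names.

```cpp
#include <cassert>
#include <vector>

// Stand-ins mirroring the interfaces shown above, so that the sketch is self-contained.
struct ISimulatedGameUnit
{
    virtual ~ISimulatedGameUnit() = default;
};

struct IVectorizer
{
    virtual bool IsLearnableSituation(const ISimulatedGameUnit& unit) const = 0;
    virtual std::vector<float> Vectorize() const = 0;
    virtual ~IVectorizer() = default;
};

// Hypothetical game state for the shooter example.
struct ShooterState
{
    float myHP = 100.0f;
    float closestEnemyHP = 100.0f;
    float myAmmo = 30.0f;
    bool inCombat = false;
};

// The vectorizer keeps a pointer to the state it measures.
// The state must outlive the vectorizer.
class ShooterVectorizer : public IVectorizer
{
public:
    explicit ShooterVectorizer(const ShooterState* state) : state(state)
    {
        assert(state != nullptr);
    }

    // Learn only from combat situations; everything else is left to MCTS.
    bool IsLearnableSituation(const ISimulatedGameUnit&) const override
    {
        return state->inCombat;
    }

    // Always return the considerations in the same order:
    // [myHP, closestEnemyHP, myAmmo].
    std::vector<float> Vectorize() const override
    {
        return { state->myHP, state->closestEnemyHP, state->myAmmo };
    }

private:
    const ShooterState* state;
};
```

Keeping the order of the returned values fixed matters because the columns are later identified only by their index.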

Running offline learning

There is no separate "Run" method for offline learning. The only thing you need to do is to add at least one OfflineLearner object to a simulated game unit.

| The OfflineLearner object accepts an IVectorizer as its only argument. You do not inherit from the OfflineLearner class. Just use it. |

You may think that the creation of the OfflineLearner object could have been hidden behind the scenes, but creating it explicitly also tells the algorithm which units jointly train the same decision tree.

A decision tree will be built based on what the OfflineLearner learns. Think of a decision tree as a model of action selection. If multiple units should share the same action selection model (e.g. they are of the same type), just give them the same OfflineLearner object. This way, all those units contribute to the learning process and there will likely be more training samples to build the decision tree from.

C++

SimulatedGame game(...);

auto learner = std::make_shared<OfflineLearner>(std::move(myVectorizer));

player1->offlineLearners.push_back(learner);

player2->offlineLearners.push_back(learner);

game.AddUnit(std::move(player1));

game.AddUnit(std::move(player2));

...

game.Run(maxMiliseconds, maxIterations);

C#

SimulatedGame game = new SimulatedGame(...);

...

game.AddUnit(player1);

game.AddUnit(player2);

...

OfflineLearner learner = new OfflineLearner(myVectorizer);

player1.OfflineLearners.Add(learner);

player2.OfflineLearners.Add(learner);

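game.Run(maxMiliseconds, maxIterations);

C++

// Here each unit gets its own OfflineLearner, so the units do not share training samples.

// myVectorizer1 and myVectorizer2 are separate instances: an object must not be moved from twice.

player1->offlineLearners.push_back(std::make_shared<OfflineLearner>(std::move(myVectorizer1)));

player2->offlineLearners.push_back(std::make_shared<OfflineLearner>(std::move(myVectorizer2)));

game.Run(maxMiliseconds, maxIterations);

C#

...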

player1.OfflineLearners.Add(new OfflineLearner(myVectorizer));

player2.OfflineLearners.Add(new OfflineLearner(myVectorizer));

game.Run(maxMiliseconds, maxIterations);

| In C++, it is important that your OfflineLearner object is not used after your SimulatedGame object goes out of scope. The OfflineLearner references data owned by the SimulatedGame object, so make sure it is not used when the SimulatedGame is no longer valid. You can also obtain data from the OfflineLearner in a self-contained form that is independent of the SimulatedGame object. The next section shows how to do it. |

Gathering data

You run simulations of the game in the normal way - exactly as you would without offline learning.

However, if at least one OfflineLearner object was added to a unit, you can gather the data it has learnt.

In order to do that, call the GetSamplesDataset() method on OfflineLearner.

C++

//assume that player1 is a unit defined in a game

auto sampleDataset = player1->offlineLearners[0]->GetSamplesDataset();

// the auto type is std::unique_ptr<UniqueTreeDataset>

C#

//assume that player1 is a unit defined in a game

var sampleDataset = player1.OfflineLearners[0].GetSamplesDataset();

// the var type is UniqueTreeDataset<ISimulatedGameAction>

You get a container for data called UniqueTreeDataset.

It is called unique because it contains only unique vectors of consideration values and aggregated statistics for these vectors. Statistics are aggregated for all actions that were chosen when those consideration values occurred.

| The GetSamplesDataset() method accepts one parameter, which is the learning threshold from [0, 1]. We recommend using values from 0.5 to 0.9. The default value is 0.7, which is a safe bet. It means that only the statistics from states that were visited in at least 70% of simulations will contribute to learning. This always includes the root node of the MCTS tree (the starting state) and possibly some of its children. |
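The effect of the learning threshold can be pictured with the following sketch. It is a simplified illustration, not the library's code: NodeStats and SelectLearnableNodes are hypothetical names, and the real selection inside Grail may differ in detail.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, simplified view of an MCTS node: only the visit count matters here.
struct NodeStats
{
    int visits;
};

// Returns the indices of nodes that were visited in at least `threshold`
// (a fraction in [0, 1]) of all simulations.
inline std::vector<std::size_t> SelectLearnableNodes(const std::vector<NodeStats>& nodes,
                                                     int totalSimulations,
                                                     float threshold = 0.7f)
{
    assert(totalSimulations > 0 && threshold >= 0.0f && threshold <= 1.0f);
    std::vector<std::size_t> selected;
    for (std::size_t i = 0; i < nodes.size(); ++i)
    {
        const float share = static_cast<float>(nodes[i].visits) / static_cast<float>(totalSimulations);
        if (share >= threshold)
            selected.push_back(i);
    }
    return selected;
}
```

The root is visited in every simulation, so its share is 1 and it passes any threshold. Lower thresholds let deeper and less visited states contribute, at the cost of noisier statistics.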

Decision trees are often created from datasets that contain duplicate rows. We will explain how to do this with the Dataset class. However, it is recommended not to duplicate data rows from a single Simulated Game run.

The premise of UniqueTreeDataset is that the aggregated statistics will be of better quality if, from a single game run, only the best action (weighted by the number of occurrences) for each unique set of consideration values is used for training.

// [MyHP, MyWeaponStrength, EnemyHP, EnemyWeaponStrength, Angle][Actions]

[50, 25, 100, 0, 0.2][STATISTICS OF ACTIONS]

You can merge data from multiple UniqueTreeDatasets. In such a case, if a particular vector of consideration values exists in both datasets, the statistics of actions will be properly merged.

C++

std::unique_ptr<UniqueTreeDataset> sampleDataset = player->offlineLearners[0]->GetSamplesDataset();

//... another batch of simulations....

player->offlineLearners[0]->FillSamplesDataset(*sampleDataset);

C#

UniqueTreeDataset<ISimulatedGameAction> dataset1 = player.OfflineLearners[0].GetSamplesDataset();

//... another batch of simulations....

player.OfflineLearners[0].FillSamplesDataset(dataset1);

When you are ready, just convert UniqueTreeDataset to Dataset using the ConvertToDataset method.

The Dataset allows training of a DecisionTree.

This is the moment when you have to declare how to interpret the consideration values that were gathered using the Vectorize method of the IVectorizer object.

As discussed in the "What decision tree is" section, they can be either nominal or numeric.

C++

std::unique_ptr<UniqueTreeDataset> sampleDataset = player->offlineLearners[0]->GetSamplesDataset();

auto finalDataset = sampleDataset->ConvertToDataset(DTConsiderationType::NOMINAL, 2); // repeats the type 2 times; equivalent to (DTConsiderationType::NOMINAL, DTConsiderationType::NOMINAL)

C#

UniqueTreeDataset<ISimulatedGameAction> sampleDataset = player.OfflineLearners[0].GetSamplesDataset();

Dataset<ISimulatedGameAction> finalDataset = sampleDataset.ConvertToDataset(DecisionConsiderationType.NOMINAL, DecisionConsiderationType.NOMINAL);

At this point, the statistically best action will be determined, so the dataset from the previous example may look as follows:

// [MyHP, MyWeaponStrength, EnemyHP, EnemyWeaponStrength, Angle][Decision]

[50, 25, 100, 0, 0.2][SHOOT-ACTION]

In addition, Dataset allows for repetitions.

The rules of thumb are:

- Have one dataset per training scenario.

- Add the samples from datasets coming from different scenarios together.

- Use the resulting dataset to train the decision tree.

This way, the results are averaged across training scenarios. If the best action is not always the same for a particular vector of consideration values, then the one that was the best in most scenarios will likely be the one advised by the decision tree.
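The averaging effect can be sketched as below. The Sample alias and both functions are hypothetical simplifications introduced for illustration. A real decision tree learner works on splits rather than on exact matches, so this only shows why the action that won in most scenarios tends to prevail.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified sample: consideration values plus the action chosen as best.
using Sample = std::pair<std::vector<float>, std::string>;

// Put the samples of all per-scenario datasets into one dataset, keeping duplicates.
inline std::vector<Sample> CombineScenarios(const std::vector<std::vector<Sample>>& scenarios)
{
    std::vector<Sample> combined;
    for (const auto& scenario : scenarios)
        combined.insert(combined.end(), scenario.begin(), scenario.end());
    return combined;
}

// For one vector of consideration values, the action that occurs most often wins.
// Ties are resolved in favor of the alphabetically first action.
inline std::string MajorityAction(const std::vector<Sample>& samples,
                                  const std::vector<float>& considerations)
{
    std::map<std::string, int> votes;
    for (const auto& sample : samples)
        if (sample.first == considerations)
            ++votes[sample.second];
    assert(!votes.empty()); // the considerations must occur in the dataset

    std::string best;
    int bestCount = 0;
    for (const auto& vote : votes)
    {
        if (vote.second > bestCount)
        {
            best = vote.first;
            bestCount = vote.second;
        }
    }
    return best;
}
```

With three scenarios where the same consideration values lead to engage, flee and engage, the combined dataset holds three samples and the majority action is engage.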

Differences between OfflineLearner, UniqueTreeDataset and Dataset

If you are curious why there are three objects for data storage: they differ from one another, and together they enable flexible learning scenarios. Just to recap:

- OfflineLearner - can be attached to units in SimulatedGame and shared among any number of them. It stores internal MCTS nodes that correspond to states in which the IsLearnableSituation method of IVectorizer returned true, together with the vectorization of those states. The OfflineLearner exists in the context of a SimulatedGame.

- UniqueTreeDataset - can be regarded as a dictionary. Its keys are vectorized states, i.e. unique vectors of floats (std::vector<float> in C++, IEnumerable<float> in C#). For the equality comparison, only 2 decimal places are used. As values, the dictionary stores the statistics of actions chosen in the states, aggregated by key.

- Dataset - during conversion from UniqueTreeDataset to Dataset, the best action is determined and the rest of the statistics are no longer used. The dataset contains vectorized states with just one action each (the best one at the moment of conversion). A Dataset may contain duplicates. After all, the actions may have come from similar situations in different games. During the learning process, it is beneficial to have as many samples as possible, duplicates included, to avoid overfitting.
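The dictionary view of UniqueTreeDataset and its conversion step can be modeled roughly as follows. Everything in this sketch is an assumption made for illustration: the type and function names are hypothetical, keys are formed by rounding to 2 decimal places (the library may truncate instead), and "best" is taken to mean the highest average score, which may not match the criterion Grail uses.

```cpp
#include <cassert>
#include <cmath>
#include <map>
#include <string>
#include <vector>

// Hypothetical, simplified model; these are not Grail's types.
struct ActionStats
{
    int visits = 0;
    double totalScore = 0.0;
};

using Key = std::vector<long>; // consideration values rounded to 2 decimal places
using UniqueTable = std::map<Key, std::map<std::string, ActionStats>>;

// Two vectors map to the same key if they agree to 2 decimal places.
inline Key MakeKey(const std::vector<float>& considerations)
{
    Key key;
    for (float value : considerations)
        key.push_back(std::lround(value * 100.0f));
    return key;
}

// Record one observation of an action taken in a state.
inline void AddObservation(UniqueTable& table, const std::vector<float>& considerations,
                           const std::string& action, double score)
{
    ActionStats& stats = table[MakeKey(considerations)][action];
    stats.visits += 1;
    stats.totalScore += score;
}

// Merging adds up the statistics of matching keys and actions.
inline void Merge(UniqueTable& target, const UniqueTable& source)
{
    for (const auto& row : source)
    {
        for (const auto& entry : row.second)
        {
            ActionStats& stats = target[row.first][entry.first];
            stats.visits += entry.second.visits;
            stats.totalScore += entry.second.totalScore;
        }
    }
}

// Conversion keeps one action per key; here the one with the highest average score.
inline std::string BestAction(const UniqueTable& table, const std::vector<float>& considerations)
{
    const auto row = table.find(MakeKey(considerations));
    assert(row != table.end()); // the state must be present in the table
    std::string best;
    double bestAverage = 0.0;
    bool first = true;
    for (const auto& entry : row->second)
    {
        const double average = entry.second.totalScore / entry.second.visits;
        if (first || average > bestAverage)
        {
            best = entry.first;
            bestAverage = average;
            first = false;
        }
    }
    return best;
}
```

The point of the intermediate table is that merging happens on statistics, before any action is declared the winner. Once a plain dataset has been produced, only the winning action per row remains.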

Training: creating decision tree

This is a very simple procedure. First, create a new decision tree passing the IVectorizer object that was used to generate training data.

Once you have a dataset object containing all the training data, call the Construct method of the tree passing the dataset.

C++

DecisionTree tree(std::make_unique<MyVectorizer>());

tree.Construct(*dataset);

tree.Print();

C#

DecisionTree tree = new DecisionTree(vectorizer);

tree.Construct(dataset);

tree.Print();

| The Print method is for debugging/diagnostic purposes. It displays raw values as returned from the Vectorize method of IVectorizer. |

You can make the printed decision tree more readable by specifying names for the columns corresponding to the data returned by the vectorizer. For example, let’s assume that you have a very simple AI unit that kites the enemy, i.e. depending on the distance it either attacks or runs away to attack later. Let’s assume that we have run many simulations and trained the decision tree to find the distance threshold that separates the two actions.

We can make the tree print this:

Distance <= 24.4529

Decision: Run away from

Distance > 24.4529

Decision: Jump and attack

You can do this with the following methods, setting the 0-th column name to "Distance":

C++

//DecisionTree.hh

/// This will make the Print() function output the name instead of Column[columnIndex]. This name does not affect anything else.

void SetColumnName(int columnIndex, const std::string& name);

/// This will make the Print() function output the names instead of Column[columnIndex]. This name does not affect anything else.

void SetColumnNames(const std::initializer_list<std::string>& consecutiveNames);

C#

//DecisionTree.cs

/// This will make the Print() function output the name instead of Column[columnIndex]. This name does not affect anything else.

public void SetColumnName(int columnIndex, string name)

/// This will make the Print() function output the names instead of Column[columnIndex]. This name does not affect anything else.

public void SetColumnNames(IEnumerable<string> consecutiveNames)

Using decision tree

A decision tree implements the generic ISimulatedGameHeuristic interface introduced in Step 8 of Simulated Games - defining a game in 10 steps.

Therefore, you can use it by simply adding it to heuristic reasoners of a unit.

C++

player->heuristicReasoners.push_back(std::move(decisionTree)); // decisionTree is a std::unique_ptr, so it has to be moved

C#

unit.HeuristicReasoners.Add(decisionTree);

The decision tree maintains its own private reference to the IVectorizer it was trained with. It is used to decide whether, in a given situation, the unit should take the action suggested by the decision tree (IsLearnableSituation returns true) or by the regular MCTS algorithm. The IVectorizer object is also used to provide consideration values, which are the input to the decision nodes.

| You can use the same decision tree for multiple units as long as it makes sense. It makes sense if the units share the same actions and considerations. In such a case, the IVectorizer typically computes the values of considerations in a unit-context-dependent way. |

If you want to see a complete working example of the offline learning process, please examine this HOW-TO.

Serialization & deserialization

You can serialize and deserialize datasets and decision trees in either text or binary mode.

In either case, you need to provide a serializer object for your actions: IDecisionStringSerializer for text serialization or IDecisionBinarySerializer for binary serialization. Everything else is serialized automatically. The action serializers are game-specific, and they are required by the serializer objects for the whole decision tree: DecisionTreeStringListSerializer and DecisionTreeBinarySerializer, respectively.

Using action serializer object is pretty straightforward - please consult the API reference.

Decision tree serialization & deserialization (text)

C++

//Text serialization

DecisionTreeStringListSerializer<ISimulatedGameAction> serializer(std::make_unique<MyDecisionStringSerializer>());

tree.Serialize(serializer);

//You can access the serialized text data

std::vector<std::string> lines = serializer.GetSerializedStrings();

//You can write data to file manually or using the DecisionTreeStringListSerializer method:

serializer.WriteToFile(filename);

//You can populate the text representation manually

serializer.SetSerializedStrings(lines);

//If you serialized the tree using DecisionTreeStringListSerializer and wrote data to file, you can read it:

serializer.ReadFromFile(filename);

//You can create the tree from text representation

tree.Deserialize(serializer);

C#

//Text serialization

DecisionTreeStringListSerializer<ISimulatedGameAction> serializer = new DecisionTreeStringListSerializer<ISimulatedGameAction>(myTextDecisionSerializer);

tree.Serialize(serializer);

//You can access the serialized text data

string[] lines = serializer.GetSerializedStrings();

//You can write to stream e.g. to a file

StreamWriter writer = new StreamWriter(filename);

serializer.WriteToStream(writer);

writer.Close();

serializer.Clear(); //clear data or create a new object

//You can populate the text representation manually

serializer.SetSerializedStrings(lines);

//You can read from stream e.g. from a file

StreamReader reader = new StreamReader(filename);

serializer.ReadFromStream(reader);

reader.Close();

tree.Deserialize(serializer);

It is important to note that the Serialize method of the DecisionTree takes a serializer as a parameter and populates it with the serialized data, text or binary depending on the type of serializer used. After this, the serializer object holds the encoding of the tree. Therefore, by default, nothing is written to disk. Writing to disk is optional and can be done in a separate step.

In the same spirit, the Deserialize method of the DecisionTree creates the tree based on the internal state held by the serializer.
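The two-step pattern described above (fill an in-memory serializer first, persist it optionally afterwards) can be illustrated with stand-in types. LineBuffer and TinyTree are hypothetical and merely mimic the roles of the string-list serializer and the tree. They are not the Grail classes and do not reproduce their signatures.

```cpp
#include <cassert>
#include <istream>
#include <ostream>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a string-list serializer: it only holds lines in memory.
class LineBuffer
{
public:
    void SetLines(std::vector<std::string> newLines) { lines = std::move(newLines); }
    const std::vector<std::string>& GetLines() const { return lines; }

    // Optional, separate step: persist the buffered lines to any output stream.
    void WriteTo(std::ostream& out) const
    {
        assert(out.good()); // the stream must be writable
        for (const auto& line : lines)
            out << line << '\n';
    }

    // Optional, separate step: refill the buffer from any input stream.
    void ReadFrom(std::istream& in)
    {
        lines.clear();
        std::string line;
        while (std::getline(in, line))
            lines.push_back(line);
    }

private:
    std::vector<std::string> lines;
};

// Hypothetical stand-in for the tree: its "nodes" are just lines of text.
struct TinyTree
{
    std::vector<std::string> nodes;

    // Fills the buffer and touches no file.
    void Serialize(LineBuffer& buffer) const { buffer.SetLines(nodes); }

    // Rebuilds the tree from whatever the buffer currently holds.
    void Deserialize(const LineBuffer& buffer) { nodes = buffer.GetLines(); }
};

// Round trip: tree -> buffer -> stream -> buffer -> tree.
inline bool RoundTrip(const TinyTree& tree)
{
    LineBuffer outBuffer;
    tree.Serialize(outBuffer);

    std::stringstream storage; // stands in for a file
    outBuffer.WriteTo(storage);

    LineBuffer inBuffer;
    inBuffer.ReadFrom(storage);

    TinyTree restored;
    restored.Deserialize(inBuffer);
    return restored.nodes == tree.nodes;
}
```

Separating the encoding from the storage means the same serialized lines can go to a file, a network message or a test fixture without the tree knowing about any of them.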

Decision tree serialization & deserialization (binary)

C++

//Serialization to file example

std::ofstream ofs;

ofs.open(fileName, std::ios_base::out | std::ios::binary | std::ios::trunc);

DecisionTreeBinarySerializer<ISimulatedGameAction> serializer(std::make_unique<MyDecisionBinarySerializer>(), &ofs);

tree.Serialize(serializer);

ofs.close();

//Deserialization from file example:

DecisionTree tree2(std::make_unique<MyVectorizer>(...));

std::ifstream ifs;

ifs.open(fileName, std::ifstream::in | std::ios::binary);

DecisionTreeBinarySerializer<ISimulatedGameAction> deserializer(std::make_unique<MyDecisionBinarySerializer>(...), &ifs);

tree2.Deserialize(deserializer);

ifs.close();

C#

//You can serialize using BinaryWriter or to MemoryStream. We will show the first case:

BinaryWriter writer = new BinaryWriter(new FileStream(filename, FileMode.Create)); // FileMode.Create truncates an existing file, like std::ios::trunc in the C++ example

DecisionTreeBinarySerializer<ISimulatedGameAction> serializer = new DecisionTreeBinarySerializer<ISimulatedGameAction>(new myBinaryDecisionSerializer(),writer);

tree.Serialize(serializer);

writer.Close();

//You can deserialize using BinaryReader, from MemoryStream or from raw byte data (byte[])

DecisionTree tree2 = new DecisionTree(myVectorizer);

BinaryReader reader = new BinaryReader(new FileStream(filename, FileMode.Open));

DecisionTreeBinarySerializer<ISimulatedGameAction> deserializer = new DecisionTreeBinarySerializer<ISimulatedGameAction>(new myBinaryDecisionSerializer(),reader); // a new name, because serializer is already declared above

tree2.Deserialize(deserializer);

reader.Close();

Dataset serialization & deserialization

Dataset serialization is useful if you want to break the offline process into sessions. You can store the results and return to them later.

C++

//In C++ you have to serialize dataset manually in your preferred way

dataset->samples // you can access the samples

//You can access sample data, e.g.

dataset->samples[0]->data

//You can also use method

dataset->samples[0]->ToString(/*IDecisionStringSerializer<ISimulatedGameAction>&*/ actionSerializer)

C#

//In C#, this is very simple:

dataset.WriteToFile(textDecisionSerializer, streamWriter);

dataset.ReadFromFile(textDecisionSerializer, streamReader);

//You can also serialize the content manually

dataset.Samples

Complete example

You can find a basic complete example in Offline Learning: Quickstart